Aligned to What?

On the incoherence of "aligning" systems

A central premise of AI safety research is that AI systems should be designed to be aligned with human values. If they are not, the argument goes, the imagined future artificial general intelligence or artificial superintelligence would kill us all.

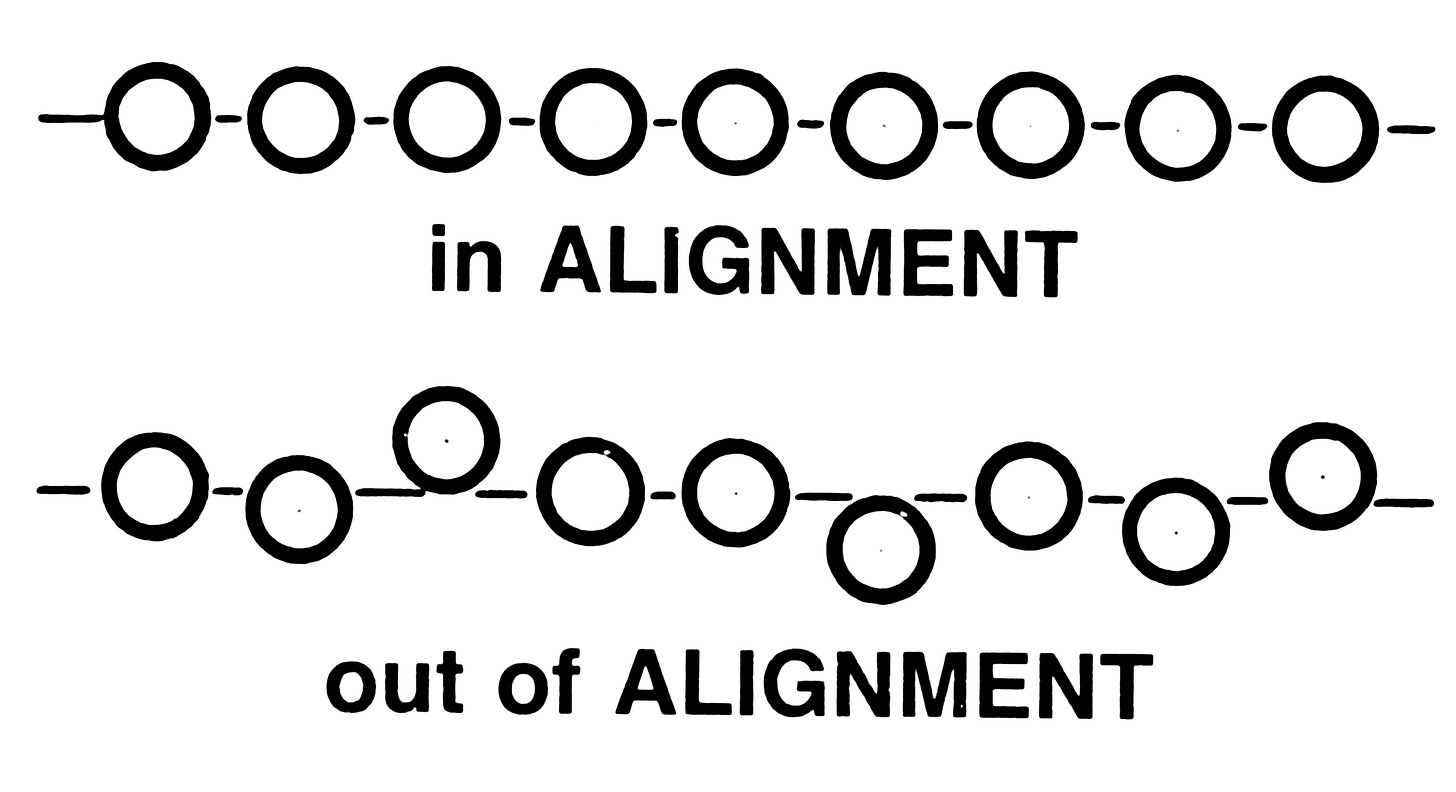

Alignment comes from Old French, combining the prefix à (to, toward) with the word ligne (line), itself from the Latin linea. Thus, the verb aligner literally means to “arrange into a line” or “bring into line.”

If the developers of AI systems are to bring their creations into line, they first need to know what line to aim for. The fundamental problem is that no one agrees on what that line should be. What are these supposedly well-understood “human values” that AI systems should act in accord with?

The main human value that AI safety practitioners seek to instill in their AI systems is a vaguely defined one: the human desire to go on living. “Do not kill humans” is a fair summary of the instruction.

Makes sense, right?

Or does it? When should these systems not kill humans? What does it mean for a human to be alive? Do the unborn count? How about the elderly? Or the sick and suffering?

If humanity cannot agree on so basic a thing as who is worthy of living, then surely we also cannot agree on what it means to live a good life. And if we do not agree on what is good, true, and beautiful, then how can we expect our creations to do so?